6 Key Trends shaping the Modern Data Stack in 2023

Our learnings & observations from the latest trends in the data stack.

Every modern organization today needs tools and technologies to collect, process, and analyze data, and to transform it into a useful form. Traditional data tools with inflexible monolithic architectures are suited for generic use cases and small quantities of low-velocity data. Modern organizations need better tools and more agile and scalable data architectures to manage and analyze vast quantities of data, keep pace with high data-generation velocities, and democratize data access for even complex use cases.

The term “modern data stack” has emerged recently with the rise of the internet, cloud computing, software as a service (SaaS), and advanced data storage and analytical systems (warehouses, lakes, etc.). Thanks to these innovations, on-premises, monolithic data systems have evolved into cloud-first, microservices-driven systems that are creating an ever-accelerating flywheel of data quantity and quality in the modern enterprise.

The ever-evolving data infrastructure, combined with emerging advanced use cases, has led to the unbundling of the modern data stack into specialized, mission-critical tools across the data lifecycle, as highlighted below (a representative, non-exhaustive landscape).

Companies building products in the data stack have raised more than $220B in the last 4 years alone, and the space has seen multiple decacorns reach $500M ARR at astronomical rates. Gartner estimates that data management is the largest infrastructure market, at $110B in 2022 (almost 28% of the overall infrastructure market), and expects it to grow to $200B by 2026, when it would represent 32% of the market.

Of course, modern data tools aren’t a silver bullet for all data needs, but they do create numerous opportunities to address many of the challenges associated with traditional data tools, particularly around processing speeds, pipeline complexity, access, manual processes, and scalability. Below, we highlight six trends that any data founder, operator, or enthusiast should look out for in 2023 and beyond.

1. Data warehouses/lakes taking center stage in the data/analytics stack

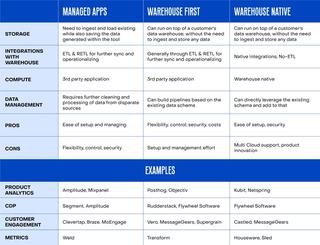

Reverse ETL becoming mainstream and the rise of Warehouse-native Apps as first-party data activation becomes critical

With the warehouse becoming the central piece for analytics data, an increasing number of organizations are focusing on activating and operationalizing it: bringing everything together in the warehouse, enriching the data with more context, and using it in downstream SaaS applications.

Today this happens through reverse ETL solutions that make operational analytics more accessible to business tools. They support downstream data transformation, data access, activation, and synchronization across departments by loading data from data warehouses into various SaaS-based business applications. With the increased adoption of warehouses and lakes, we can expect reverse ETL to become mainstream and probably even become a standard built-in activation module for most SaaS applications.
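To make the reverse ETL flow concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions: an in-memory SQLite database stands in for the warehouse, and `FakeCrmClient` with its `upsert_contact` method is a hypothetical placeholder for a SaaS tool's API, not any vendor's real client.

```python
import sqlite3
from typing import Dict


class FakeCrmClient:
    """Hypothetical stand-in for a SaaS API client; keeps upserts in memory."""

    def __init__(self) -> None:
        self.contacts: Dict[str, dict] = {}

    def upsert_contact(self, email: str, fields: dict) -> None:
        # Merge the new fields into any existing record keyed by email.
        self.contacts.setdefault(email, {}).update(fields)


def build_warehouse() -> sqlite3.Connection:
    """Create an in-memory SQLite database standing in for the warehouse."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (email TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [
            ("a@example.com", 120.0),
            ("a@example.com", 80.0),
            ("b@example.com", 30.0),
        ],
    )
    return conn


def sync_lifetime_value(conn: sqlite3.Connection, crm: FakeCrmClient) -> int:
    """Compute a per-customer metric in the warehouse and push it to the CRM."""
    rows = conn.execute(
        "SELECT email, SUM(amount) FROM orders GROUP BY email ORDER BY email"
    ).fetchall()
    for email, lifetime_value in rows:
        crm.upsert_contact(email, {"lifetime_value": lifetime_value})
    return len(rows)


if __name__ == "__main__":
    crm = FakeCrmClient()
    synced = sync_lifetime_value(build_warehouse(), crm)
    print(synced, crm.contacts)
```

The metric is computed where the data already lives, and only the result is pushed out. A production sync would also need incremental change detection, retries, and rate limiting, all of which this sketch leaves out.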

We’re already seeing the rise of warehouse-native apps and frameworks that provide direct access to data sources from within the warehouse itself. Building directly on the warehouse will allow teams to use the same schema in their controlled & safe environment and leverage on-warehouse compute instead of investing in another data integration tool. Leveraging a warehouse-first architecture allows them to get the features they need to build and activate a real-time, unified source of truth for customer data without having to store any data with a third-party vendor or suffer from the vendor’s technological limitations.

Snowflake recently announced its Native Application Framework, which lets application providers use Snowflake's core functionality to build their applications, distribute and monetize them in Snowflake Marketplace, and deploy them directly inside a customer’s Snowflake account. Application providers get immediate exposure to thousands of Snowflake customers worldwide, while customers keep their data centralized and can significantly simplify their application procurement process. Application builders can sell their native apps to Snowflake's existing customer base while also extending similar capabilities to customers on other data warehouses through a managed offering. Companies like LiveRamp and Panther have already started embracing this approach, and many other companies are expected to follow suit.

The Emergence of Zero ETL on Hyperscaler Cloud Platforms to enable more workflows on the warehouse

Zero ETL is an approach to building data pipelines without traditional ETL processes and tools. The idea is to store, process, transfer, and analyze data within the source system and in its original format, without requiring complex data transformations, manipulations, or a lot of manual intervention. It also aims to keep data up to date through continuous federation between connected business systems and processes. In 2023, more mission-critical tools will come with built-in zero ETL capabilities and sit directly on top of data warehouses.

Many data technology vendors are adding zero ETL to their offerings. For example, AWS now supports native zero ETL integration capabilities between:

- Amazon Aurora relational database engine for the cloud, and Amazon Redshift petabyte-scale data warehouse in the cloud,

- Amazon Redshift and Apache Spark engine for large-scale data analytics.

Recently, Google also announced its zero-ETL analytics approach on data in Bigtable (a fully managed, NoSQL database) using BigQuery (a serverless, multi-cloud data warehouse).

- It enables users to directly query data in Bigtable via BigQuery without moving or copying data, so results are available faster and they can derive more useful insights from it for a range of use cases.

With these integrations, business users don’t have to build and maintain complex data pipelines and can perform real-time analytics and machine learning (ML) operations to unlock greater value from petabytes of data. While these offerings compete with the traditional ETL tools for integration setup, time to value, and cost of ownership, these are only optimized for single-cloud vendor ecosystems currently and teams with a multi-cloud strategy might still need to bring in external tooling.
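The "query in place" idea behind zero ETL can be illustrated with a small local analogy. The sketch below is not the AWS or Google integration: it uses SQLite's `ATTACH DATABASE` so that an analytics connection reads an operational database file directly, with no extract or load step. The table name `events`, the schema alias `ops`, and the helper names are assumptions made for the example.

```python
import os
import sqlite3
import tempfile


def create_operational_db(path: str) -> None:
    """Create a small 'operational' database file with a few events."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE events (user_id TEXT, kind TEXT)")
    conn.executemany(
        "INSERT INTO events VALUES (?, ?)",
        [("u1", "click"), ("u1", "purchase"), ("u2", "click")],
    )
    conn.commit()
    conn.close()


def count_events_in_place(operational_path: str) -> dict:
    """Query operational data from an analytics connection without copying it."""
    analytics = sqlite3.connect(":memory:")
    # ATTACH exposes the other database under the schema name "ops";
    # no rows are extracted or loaded into the analytics database.
    analytics.execute("ATTACH DATABASE ? AS ops", (operational_path,))
    rows = analytics.execute(
        "SELECT kind, COUNT(*) FROM ops.events GROUP BY kind"
    ).fetchall()
    analytics.close()
    return dict(rows)


def demo() -> dict:
    """Build a temporary operational database and query it in place."""
    with tempfile.TemporaryDirectory() as tmp_dir:
        path = os.path.join(tmp_dir, "ops.db")
        create_operational_db(path)
        return count_events_in_place(path)


if __name__ == "__main__":
    print(demo())
```

As in the managed offerings, the convenience comes from both systems living in the same ecosystem; the single-vendor trade-off noted above applies to this analogy as well.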

2. Security and Privacy Discussions Will Take Center Stage Among Data-Conscious and Data-driven Companies

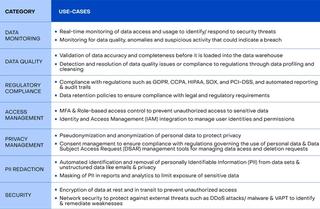

The increase in data being generated and consumed, the rise of data interoperability across multiple platforms, and newly emerging use cases all call for better democratization of domain-specific data across different personas within the modern organization. Technology or IT teams will no longer be the sole gatekeepers of data for governance purposes; functional users increasingly need self-serve access to data in a secure and compliant manner.

Strong data governance means the organization has appropriate controls and safeguards in place to ensure high data observability, easy data discovery, and reliable data privacy and security. These controls will help to make the data more reliable and trustworthy for a wide range of business use cases. They will also play a role in ensuring that the entire data stack is compliant with applicable privacy regulations, whether it is GDPR, CCPA, HIPAA, or something else. The focus on compliance and best practices will increase because non-compliance can directly affect companies’ top lines and reputations, as is already evident from the crackdown on Big Tech in the last few years for the “privacy debt” it has accumulated. These laws and hefty financial consequences have changed how tech companies consider and protect consumer privacy.

We’re already seeing multiple data tooling categories emerge, given that privacy and governance management can no longer be treated as a ‘good-to-have’ and is now table stakes for any serious enterprise. Furthermore, with more than 70% of nations announcing their own consumer data protection acts, privacy-first product positioning will become the norm for vendors supporting the modern data stack, and more of them will offer privacy protection features like built-in consent management and access governance controls for privacy-conscious organizations.
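As a rough illustration of what built-in consent management and access governance controls might look like at the data layer, consider the following Python sketch. The role names, the `ROLE_COLUMNS` policy, and the `marketing_consent` flag are all hypothetical and chosen only for the example; real products express such policies in their own ways.

```python
from dataclasses import dataclass
from typing import Dict, List, Set

# Hypothetical policy: the columns each role may see unmasked.
ROLE_COLUMNS: Dict[str, Set[str]] = {
    "analyst": {"user_id", "country"},
    "support": {"user_id", "email", "country"},
}

DATA_COLUMNS = ("user_id", "email", "country")


@dataclass(frozen=True)
class Record:
    user_id: str
    email: str
    country: str
    marketing_consent: bool


def visible_rows(records: List[Record], role: str, purpose: str) -> List[dict]:
    """Apply consent filtering and column masking before data leaves the store."""
    allowed = ROLE_COLUMNS.get(role, set())
    result = []
    for rec in records:
        # Consent management: drop users who have not opted in to marketing use.
        if purpose == "marketing" and not rec.marketing_consent:
            continue
        # Access governance: mask any column the role is not cleared to read.
        row = {
            col: getattr(rec, col) if col in allowed else "***"
            for col in DATA_COLUMNS
        }
        result.append(row)
    return result


if __name__ == "__main__":
    data = [
        Record("u1", "a@example.com", "IN", True),
        Record("u2", "b@example.com", "US", False),
    ]
    print(visible_rows(data, role="analyst", purpose="marketing"))
```

Enforcing both checks in one place means every downstream consumer gets the same guarantees, and an unknown role falls back to seeing nothing unmasked.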

3. Real-time Machine Learning will become Mainstream for Time-sensitive Use Cases like Fraud Detection and the Attention Economy

While machine learning (ML) is already deeply embedded in enterprise DNA, there are already signs of many organizations adopting real-time ML and analytics that will transform existing use cases and also create completely new ones.

- Real-time ML will enable quick responses in scenarios where user preferences change quickly in order to deliver enhanced customer experiences. It will be particularly useful in the attention economy. In eCommerce and social media, real-time ML models will provide personalized recommendations in response to users’ changing search queries or preferences, thus retaining their attention and converting it into tangible results such as higher average order values (AOVs).

- Real-time ML could also improve anomaly detection systems by continuously predicting anomalous behaviors and identifying fraud, cyberattacks, and other undesirable incidents. If left undetected, these incidents could cause massive damage to organizations and their stakeholders.

- That said, some organizations may grapple with the challenges and risks associated with real-time ML. One is the need for high-quality, real-time data. It can be hard to meet this need, especially when dealing with multiple sources, since they provide data at different times and in different formats. Another challenge is that real-time ML requires high-performance computing resources to process large volumes of data efficiently and in real time. Not all organizations have the resources or funds to perform such computationally intensive tasks for their real-time ML applications.
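To give a flavor of continuous anomaly detection on a stream, here is a deliberately simple Python sketch. It is a statistical stand-in rather than a trained ML model: it keeps a running mean and variance with Welford's online algorithm and flags values whose z-score exceeds a threshold. The class name, the threshold of 3.0, and the warm-up of 5 observations are assumptions made for the example.

```python
import math


class StreamingAnomalyDetector:
    """Flags values that deviate strongly from the running mean of the stream."""

    def __init__(self, threshold: float = 3.0, warmup: int = 5) -> None:
        self.threshold = threshold
        self.warmup = warmup
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def score(self, value: float) -> bool:
        """Return True if the value looks anomalous, then fold it into the stats."""
        is_anomaly = False
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(value - self.mean) / std > self.threshold:
                is_anomaly = True
        # Welford's update: constant time and memory per event.
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)
        return is_anomaly


if __name__ == "__main__":
    detector = StreamingAnomalyDetector()
    stream = [10, 11, 9, 10, 12, 10, 11, 500, 10]
    print([detector.score(v) for v in stream])
```

Each event is scored in constant time without revisiting history, which is what makes this kind of check viable on a live stream. One design choice to note: flagged values are still folded into the statistics, so a single large outlier inflates the variance for later events; a production system would likely handle this differently.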

Beyond these challenges, real-time ML carries other risks, including model drift and performance degradation, overfitting, and underfitting. It will be interesting to see new tools and frameworks emerge for both data pipelines and model ops in this rapidly evolving space.

Read more about our deep dive on real-time ML here.

4. Data Mesh Architectures Will Enable Greater Data Democratization and Scalability

In recent years, many development teams have transitioned from monolithic architectures and legacy SDLC models to more modern microservices architectures and the DevOps approach, which brings greater agility and flexibility into the SDLC, improves software security and reliability, and speeds up product time-to-market.

A data mesh is like the modern data stack's version of microservices and DevOps: it comprises tooling and organizational processes for better implementation and usage of data and data tooling within an organization. It provides a decentralized data architecture that organizes data by business domain and allows users to take more ownership of their data while collaborating with other domains. In addition, it lets them set data governance policies, enables universal data interoperability and observability, and eliminates the operational bottlenecks that are common with centralized, monolithic systems.

A data mesh decentralizes data ownership and management responsibility and ensures that data is more readily available to all parts of the organization. It thus eliminates many common data challenges for businesses.

- One is that a lot of their data exists in siloed format, creating bottlenecks that make it hard for them to leverage their data assets effectively.

- Another challenge is that many companies depend on data professionals to capture and analyze their data, which hinders non-technical and business users from deriving value from it for their specific use cases.

- Adopting a data mesh will enable organizations to create a more scalable data architecture and democratize data access, which will remove these common challenges. They will also be able to scale their data-driven initiatives and tap into new opportunities for data-driven growth.
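A toy Python model can make the data mesh idea more tangible: each domain publishes a data product it owns, and a shared catalog enforces a minimal governance rule and makes products discoverable. This is only a sketch under assumptions; `DataProduct`, `MeshCatalog`, and the rule that every product must declare an owner and a schema are invented for illustration and do not describe any specific tool.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class DataProduct:
    """A dataset published and owned by a single business domain."""

    domain: str
    name: str
    owner: str
    schema: Dict[str, str]
    read: Callable[[], List[dict]]


class MeshCatalog:
    """Shared registry: domains own their data, the catalog enforces the rules."""

    def __init__(self) -> None:
        self._products: Dict[str, DataProduct] = {}

    def register(self, product: DataProduct) -> str:
        # Assumed governance policy: every product declares an owner and a schema.
        if not product.owner or not product.schema:
            raise ValueError("data products must declare an owner and a schema")
        key = f"{product.domain}.{product.name}"
        self._products[key] = product
        return key

    def discover(self, domain: str) -> List[str]:
        """List the products a given domain has published."""
        return sorted(k for k, p in self._products.items() if p.domain == domain)

    def query(self, key: str) -> List[dict]:
        """Read a product through the interface its owning domain exposes."""
        return self._products[key].read()


if __name__ == "__main__":
    catalog = MeshCatalog()
    catalog.register(
        DataProduct(
            domain="sales",
            name="orders",
            owner="sales-data-team",
            schema={"order_id": "str", "amount": "float"},
            read=lambda: [{"order_id": "o1", "amount": 42.0}],
        )
    )
    print(catalog.discover("sales"), catalog.query("sales.orders"))
```

Consumers in other domains never touch the sales team's storage directly; they go through the published interface, which is what lets ownership stay decentralized while governance stays consistent.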

Read more about the data-mesh architecture here.

That said, moving away from a centralized data management approach to a decentralized one like a data mesh will require a significant cultural shift as well as investments in data governance and quality management. These shifts can be difficult to achieve because of the inertia of large teams, siloed and inefficient organizational processes, and the need for new tooling and robust governance frameworks to ensure compliance with the desired processes.

5. Data Stack Offerings Will Be Bundled to Simplify Adjacent & Similar Use-cases

As the number of data tools grows within an organization, teams will look for broader platforms that support multiple complementary point use cases in order to improve operational and tech-spend efficiency. This is where consolidation will play a role. Consolidation is not a new trend in the tech industry, and the data stack is no exception. In recent years, we have seen an increasing number of mergers and acquisitions as companies seek to expand their capabilities and gain a competitive edge. These bundled offerings will enable organizations to do more with their data using the same platform, instead of having to add more tools to their stack for different purposes.

Bundling in The Data Integration Space (ETL and Reverse ETL)

Traditional data stacks are based on the ETL (Extract-Transform-Load) method of data integration, where raw data is extracted from sources, transformed into a certain format, and loaded into a target database. While this helps users analyze data, it does not let them activate the data, make it available to downstream business tools, or enrich it for use across departments. Here’s where reverse ETL (rETL) comes in. rETL can push data from data warehouses to multiple business tools like CRMs and advertising platforms so marketing, sales, and other teams can easily operationalize data and act on its insights quickly to benefit the organization.

Bundling ETL and rETL will enable warehouse-first companies to access a comprehensive solution that addresses their end-to-end data integration needs. This trend is expected to continue as businesses seek to streamline their data integration processes and simplify their data infrastructure.

- After establishing itself as a leading open-source ELT tool, Airbyte has now entered the rETL space with its acquisition of Grouparoo.

- Hevo Data also started with an ETL offering and has now launched an rETL offering.

Consolidation in the behavioral analytics space (CDPs vs Product Analytics)

Consolidation is also accelerating among customer data platforms (CDPs) and product analytics (PA) platforms because, in a way, both capture user behavior data. In addition, both platforms overlap significantly in infrastructure capabilities and have similar ideal customer profiles (ICPs) as growth becomes a hybrid function of product and marketing. However, CDPs have traditionally focused on collecting and unifying customer data across multiple sources, while product analytics platforms have focused on analyzing user behaviors specifically to improve product performance.

Now, with the rise of first-party data, the overlap and convergence between these platforms are increasing even more. More CDPs are adding product analytics capabilities, while product analytics platforms are starting to collect and unify customer data.

- Amplitude recently announced its own CDP solution to complement its industry-leading PA solution. Unlike other CDPs that require connecting to third-party analytics solutions, Amplitude CDP will leverage its natively-integrated PA solution to both collect and analyze event data.

- mParticle, which previously focused only on CDP, has also moved into PA after acquiring Indicative.

As a result of such recent consolidations, the lines between CDPs and product analytics products are blurring, and we can expect to see further consolidation in this space after 2023. Gartner predicts that by 2023, the market for customer data platforms (CDPs) will have consolidated by 70%, which suggests that both CDP and PA platforms will start moving upstream (infrastructure) as well as downstream (data activation). These two previously disparate modules will work seamlessly with each other and enable organizations to enhance the value of their data for data-driven decision-making and customer experience management.

6. Sharper positioning and targeted selling to specific data sub-functions for bottom-up championing

The modern data stack has become more democratized and decentralized in recent years, thanks to the emergence of new tools and technologies that make it easier for a wider range of personas to participate in the data lifecycle within an enterprise. In the past, purchasing decisions for data tools and technologies were typically made by a small group of decision-makers, often limited to IT or data teams.

However, with the rise of PLG/self-service analytics tools and cloud-based platforms, more personas within an enterprise are becoming involved in the data lifecycle. This includes business analysts, data scientists, CXOs, marketers, and other stakeholders who need access to data to make informed decisions. As a result, the buying and decision-making process for tools in the modern data stack has become more democratized, with a wider range of personas having a say in which tools are purchased and implemented.

In the current market, which calls for financial and operational efficiency, the ability to showcase ROI will be key for any software vendor. Because the user and the buyer are often different specialized personas, vendors will need to understand and map the decision-makers and the buying process in detail, and show the relevant ROI to the multiple stakeholders involved at each stage.

Conclusion

As per a survey by the Conference Board, 2023 is expected to be a gloomy year for markets around the world, with almost 98% of CEOs preparing for a recession in the next 12 months. However, even with such headwinds, global enterprise IT spending is still expected to increase to $4.66 trillion in 2023, according to Gartner. Moreover, in a competitive landscape like this, more companies will try to leverage data as a key differentiator and will look for high-ROI solutions to overcome data-related challenges and tap into data-powered opportunities. Innovators that can satisfy these needs will zoom ahead in the race for data stack dominance.

While the above represents only a subset of the exciting trends we’re seeing in the data infrastructure and applications space, we expect further disruption within the space with generative AI and subML becoming mainstream. So if you’re a founder, operator, or an enthusiast in data, dev tools, infrastructure, or SaaS, building on the above trends or beyond, please reach out at akash@elevationcapital.com and/or anuraag@elevationcapital.com and we would love to host you for brainstorming, partnering, and more.
